Apache Kafka is a high-performance, resilient, open source, event-driven publish/subscribe (pub-sub) messaging platform that provides loosely coupled connections between a variety of message producers and consumers. If that sentence seems like a whole bunch of confusing jargon, read on! In this post, I’ll introduce the basics of Kafka and explain why it can be so valuable.

At the recent COMMON conference in St. Louis, many IBM i users were looking to learn about Kafka for their IBM i systems. They needed highly responsive (very fast), reliable ways to connect their existing IBM i applications to eCommerce applications, supply chain APIs, financial transaction processors, applications on Windows/Linux/UNIX platforms, and systems supporting a wide variety of other use cases. They had heard that Kafka was an excellent way to address those requirements.

According to the Apache Software Foundation, more than 80% of Fortune 100 companies use Kafka. It is also used by many small and medium-sized businesses to give users the best possible response time when accessing their applications and to handle the rapidly increasing volume of machine-to-machine communications.

So why use Kafka? The answer includes:

• Performance: millisecond response times, even for large numbers of simultaneous requests

• Capacity to handle high volumes of requests: process billions of transactions per day

• Resilience: messages are replicated across multiple servers, so your systems keep running even if one of those servers fails

• Simple maintenance: no need to build and maintain multiple application-to-application integrations

Let’s look at a sample Kafka eCommerce use case:

Many customers are using Kafka to integrate their eCommerce systems because they want real-time sharing of transaction data among a variety of systems.

Let’s say I am selling my products through my eCommerce website. I might want to take several actions when a customer is preparing to place an order (there certainly could be many more than these):

1. Check the customer’s credit availability

2. Check inventory for product availability

3. Reserve the items in the order in my IBM i inventory immediately, so the same inventory is not sold more than once

4. Get a shipping quote

5. Generate a price quote

The traditional way to do this would be to write a separate, direct integration between the eCommerce system and each back-end application:

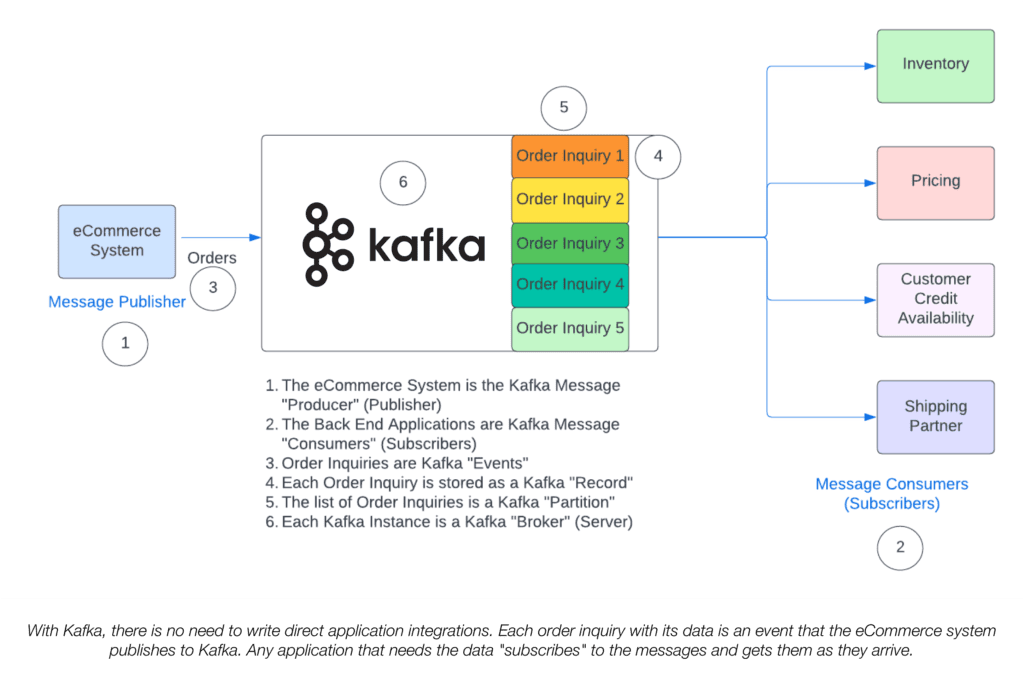

With Kafka, you would avoid creating these direct integrations. The eCommerce system would simply publish each order inquiry to Kafka, and Kafka would make those records available to each subscribing application. That kind of loosely coupled architecture eliminates the need to create multiple individual integrations. It allows you to maintain the eCommerce and back-end applications separately without worrying about breaking the connection. This is how this same eCommerce integration would look in a Kafka environment:

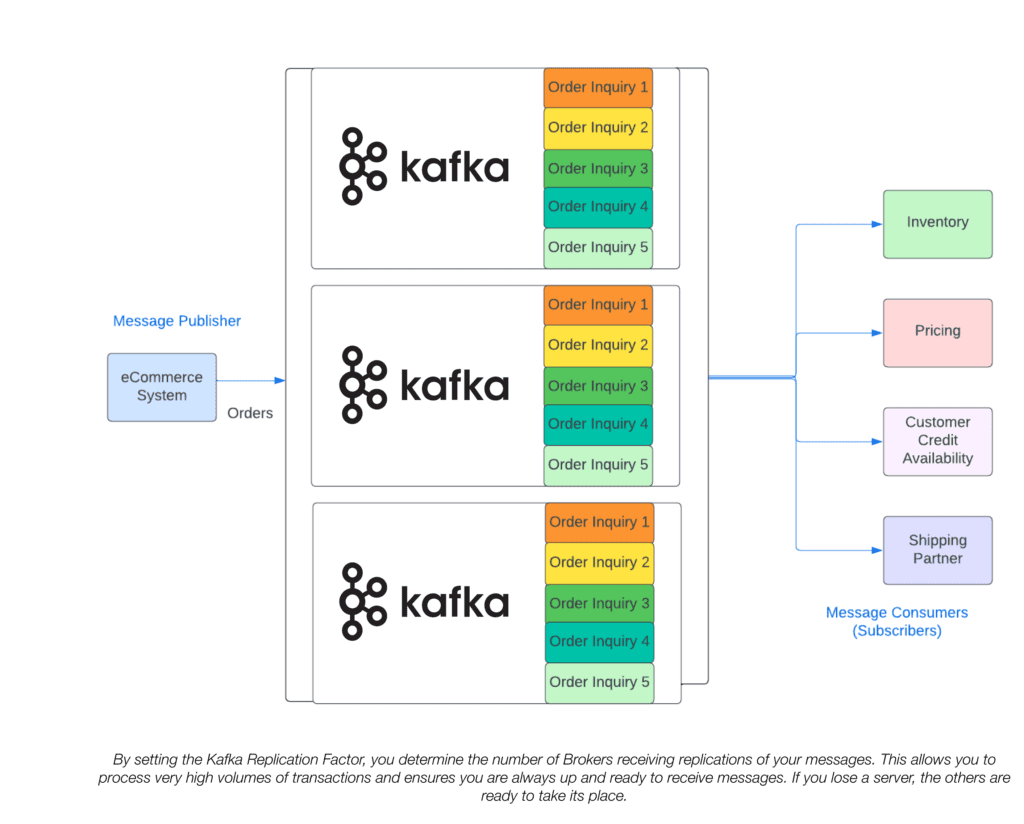
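To make the publish/subscribe idea concrete, here is a toy, in-memory model in Python. It is not the Kafka client API: `Topic`, `publish`, `poll`, and the subscriber names are hypothetical. It illustrates the key property under a simplifying assumption of a single partition: the eCommerce system writes each order inquiry once to an append-only log, and each subscribing application (in Kafka terms, each consumer group) reads it at its own pace by tracking its own offset.

```python
from collections import defaultdict


class Topic:
    """Toy, in-memory stand-in for a single-partition Kafka topic.

    Records are appended to a log and are not removed when read;
    each consumer group keeps its own read position (offset).
    """

    def __init__(self, name):
        self.name = name
        self.log = []                    # append-only list of records
        self.offsets = defaultdict(int)  # consumer group -> next offset to read

    def publish(self, record):
        """Append a record and return its offset in the log."""
        self.log.append(record)
        return len(self.log) - 1

    def poll(self, group):
        """Return every record this group has not read yet, then advance its offset."""
        start = self.offsets[group]
        records = self.log[start:]
        self.offsets[group] = len(self.log)
        return records


# The eCommerce site publishes each order inquiry once...
orders = Topic("order-inquiries")
orders.publish({"order_id": 1001, "customer": "C042", "sku": "WIDGET-7", "qty": 3})

# ...and every back-end application reads it independently.
subscribers = ["credit-check", "inventory", "shipping-quote", "pricing"]
received = {group: orders.poll(group) for group in subscribers}
```

Adding a fifth back-end application only means polling with another group name; the publishing code does not change. Real Kafka layers partitions, durable storage, and network clients on top of this basic log-plus-offsets idea.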

Kafka can automatically replicate incoming messages across multiple Kafka brokers (servers). This protects you from losing data if a Kafka server goes down. And because Kafka splits each topic into partitions that can be spread across brokers running on different systems, you can keep scaling out as your message volume grows. Some Kafka users handle billions of messages per day. (LinkedIn, where Kafka was originally developed, recently surpassed 7 trillion messages daily.)
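Replication can be sketched the same way. This is a deliberately simplified model, assuming every live replica is updated before a write is acknowledged (roughly what a producer gets with `acks=all` when all replicas are in sync). It leaves out leader election rules, follower catch-up, and recovery; `Broker` and `ReplicatedPartition` are hypothetical names, not Kafka classes.

```python
class Broker:
    """Toy broker holding one replica of a partition's log."""

    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.log = []      # this broker's copy of the records
        self.alive = True  # set to False to simulate a server failure


class ReplicatedPartition:
    """Toy partition replicated across several brokers (hypothetical model)."""

    def __init__(self, brokers):
        self.brokers = brokers

    def live_brokers(self):
        return [b for b in self.brokers if b.alive]

    def leader(self):
        """The first live broker acts as leader; fail if none are left."""
        live = self.live_brokers()
        if not live:
            raise RuntimeError("no live replicas")
        return live[0]

    def write(self, record):
        """Copy the record to every live replica, then acknowledge it."""
        live = self.live_brokers()
        if not live:
            raise RuntimeError("no live replicas")
        for broker in live:
            broker.log.append(record)
        return len(live)  # how many replicas stored the record

    def read_all(self):
        """Read from whichever broker is currently the leader."""
        return list(self.leader().log)


brokers = [Broker(1), Broker(2), Broker(3)]  # replication factor of 3
partition = ReplicatedPartition(brokers)
acks = partition.write({"order_id": 1001, "status": "reserved"})

brokers[0].alive = False          # the leader's server goes down
surviving = partition.read_all()  # broker 2 takes over with a full copy
```

Losing broker 1 does not lose the order record, because brokers 2 and 3 already hold copies of it.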

So, the advantages of using Kafka are:

1. It is extremely fast

2. It can support many to many application connections without writing multiple integrations

3. Kafka connections are easy to maintain

4. It protects you from unexpected downtime

5. It can handle extremely high volumes of requests

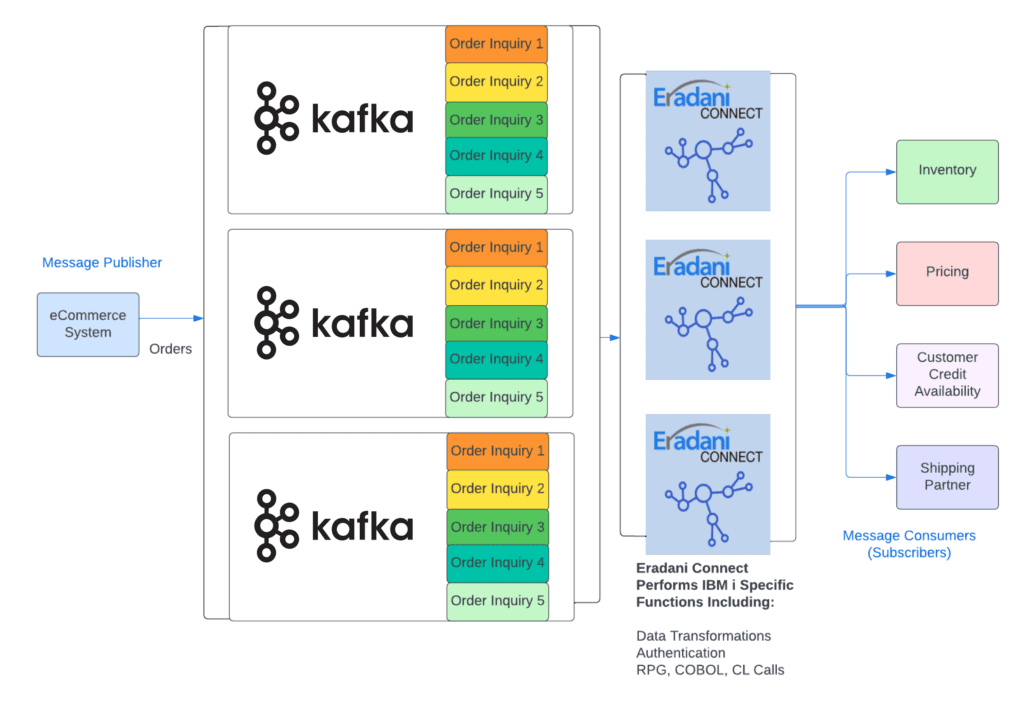

There is still one challenge to using Kafka on the IBM i: how do you convert messages between Kafka and the formats the IBM i understands? To avoid losing Kafka’s performance and reliability advantages, you need something that is scalable and resilient and that can perform data transformations at the speed of Kafka.

The good news is that Eradani Connect is designed to do just that. Eradani Connect can perform high-speed data transformations and micro-batching of transactions, while matching Kafka’s replication, without placing a significant processing load on your IBM i.
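Micro-batching in general means grouping individual transactions into small batches so the receiving system handles fewer, larger units of work. The sketch below is a generic illustration, not Eradani Connect’s implementation: a hypothetical `MicroBatcher` releases a batch when it reaches `max_size` records or when the oldest record has waited `max_wait` seconds, whichever comes first. Kafka’s own producer applies a similar size-or-time rule through its `batch.size` and `linger.ms` settings.

```python
import time


class MicroBatcher:
    """Collect records and release them in small batches (hypothetical helper).

    A batch is released when it holds max_size records or when its oldest
    record has waited at least max_wait seconds, whichever comes first.
    """

    def __init__(self, max_size, max_wait, clock=time.monotonic):
        self.max_size = max_size
        self.max_wait = max_wait
        self.clock = clock        # injectable so timing can be tested
        self.pending = []
        self.first_added = None   # time the oldest pending record arrived

    def add(self, record):
        """Add a record; return a full batch if one is ready, else None."""
        if not self.pending:
            self.first_added = self.clock()
        self.pending.append(record)
        if len(self.pending) >= self.max_size:
            return self.flush()
        return None

    def poll(self):
        """Return the pending batch if it has waited long enough, else None."""
        if self.pending and self.clock() - self.first_added >= self.max_wait:
            return self.flush()
        return None

    def flush(self):
        """Release whatever is pending and start a new, empty batch."""
        batch, self.pending, self.first_added = self.pending, [], None
        return batch


now = [0.0]  # fake clock for the example
batcher = MicroBatcher(max_size=3, max_wait=0.05, clock=lambda: now[0])
results = [batcher.add(f"txn-{i}") for i in range(4)]  # 4th starts a new batch
now[0] = 0.1
late = batcher.poll()  # the partly filled batch times out
```

In a long-running process you would call `poll()` periodically, so a partly filled batch never waits much longer than `max_wait`.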

Without Kafka and Eradani Connect, your IT staff must build and maintain many individual system-to-system integrations. When you make a change, you must update all of the integrations. Programmers have to write the code to secure your APIs and to translate open message formats such as JSON, XML, comma-delimited files, and others into formats the IBM i understands. With Kafka and Eradani Connect, most of that work is done for you.
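Here is a rough sketch of that translation work in Python, using only the standard library. It is not Eradani Connect’s implementation. The order fields, the fixed-width layout, and the choice of EBCDIC CCSID 37 (Python’s `cp037` codec) are assumptions for illustration; a real mapping would come from the record layout of the IBM i program or file receiving the data, and many IBM i layouts also use packed decimal, which this sketch does not handle.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical fixed-width layout for an IBM i order record:
# (field name, width, kind). A real layout would come from the RPG data
# structure or DDS file definition of the target program or table.
ORDER_LAYOUT = [
    ("order_id", 9, "num"),
    ("customer", 10, "char"),
    ("sku", 15, "char"),
    ("qty", 5, "num"),
]
FIELDS = [name for name, _, _ in ORDER_LAYOUT]


def parse(text, fmt):
    """Normalize a JSON, XML, or CSV order message into a dict of strings."""
    if fmt == "json":
        data = json.loads(text)
        return {f: str(data[f]) for f in FIELDS}
    if fmt == "xml":
        root = ET.fromstring(text)
        return {f: root.findtext(f, default="") for f in FIELDS}
    if fmt == "csv":
        # Assumes a header row naming the fields, followed by one data row.
        row = next(csv.DictReader(io.StringIO(text)))
        return {f: row[f] for f in FIELDS}
    raise ValueError(f"unsupported format: {fmt}")


def to_fixed_width(fields, layout=ORDER_LAYOUT):
    """Build a fixed-width record: numbers zero-filled (unsigned), text blank-padded."""
    parts = []
    for name, width, kind in layout:
        value = fields[name]
        text = str(int(value)).zfill(width) if kind == "num" else value.ljust(width)
        if len(text) > width:
            raise ValueError(f"{name}={value!r} does not fit in {width} positions")
        parts.append(text)
    return "".join(parts)


def to_ibm_i_record(text, fmt):
    """Translate one message into EBCDIC (CCSID 37) bytes."""
    return to_fixed_width(parse(text, fmt)).encode("cp037")


messages = {
    "json": '{"order_id": 1001, "customer": "C042", "sku": "WIDGET-7", "qty": 3}',
    "xml": "<order><order_id>1001</order_id><customer>C042</customer>"
           "<sku>WIDGET-7</sku><qty>3</qty></order>",
    "csv": "order_id,customer,sku,qty\n1001,C042,WIDGET-7,3\n",
}
records = {fmt: to_ibm_i_record(msg, fmt) for fmt, msg in messages.items()}
```

Normalizing every input format into one intermediate shape first means the IBM i layout is defined in a single place, so a new message format only needs a new parser.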

To find out more about how Eradani Connect can help you implement an advanced, high-performance Kafka environment at your organization, contact us at info@eradani.com or through our website at https://eradani.com/contact-us.